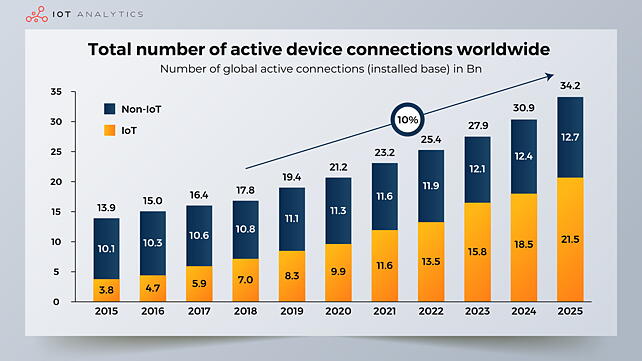

The total number of active device connections worldwide has been growing year on year, with IoT devices showing 2.6x growth from 2015 to 2020 and an estimated 2.1x growth between 2020 and 2025, as can be seen in Figure 1 [1]. Connected vehicles are expected to account for about 96% of total vehicle sales worldwide in 2030, up from about 48% in 2020.

The billions of IoT devices need to be designed and developed and their associated data need to be tested using specific test cases associated with their industries or applications. This is a mammoth task requiring high bandwidth and low latency to support real-time IoT services.

The current solution, a distributed IoT architecture built around a centralised platform in the cloud, will find it increasingly difficult to provide real-time services that depend on massive data processing, and this will impede the scaling up of the central IoT platform. Recent research has investigated solutions based on new technologies such as Multi-access Edge Computing (MEC) and Artificial Intelligence/Machine Learning (AI/ML) algorithms for more efficient data management and improved real-time service [2], [3], [4], [5].

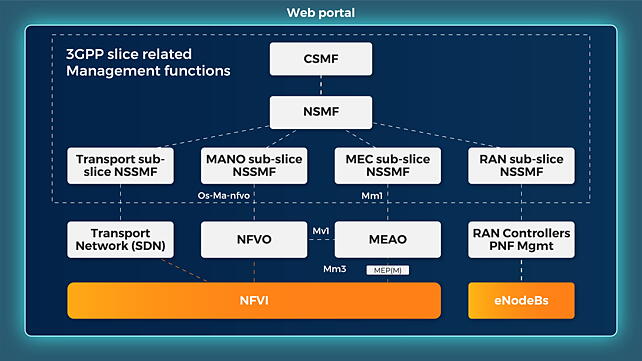

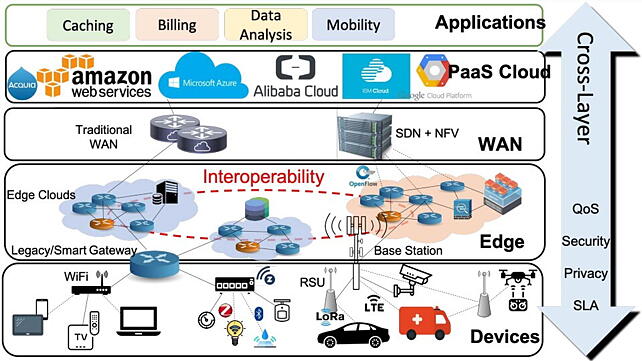

The advent of 5G networks and the new Network Slicing (NS) technology allow catering to multiple IoT service requirements using the existing network infrastructure. The new techniques of Network Function Virtualisation (NFV) and Software-Defined Networking (SDN) associated with 5G networks allow the implementation of flexible and scalable network slices on top of a physical network infrastructure.

The integration of SDN and NFV enables increased throughput and the optimal use of network resources, which was hitherto impossible with SDN or NFV applied individually. To realise the benefits of improved throughput and latency through the integration of SDN and NFV with network slicing on physical network infrastructure, the application server must be located at the edge node rather than at a distant central cloud location, so that the Round-Trip Time (RTT) for messages from IoT devices to the server and back is reduced.

In addition to having an architecture virtualising the IoT common service functions and deploying them to an edge node, IoT resources and the associated services must also be delivered at the edge nodes. In scenarios where IoT services are running in the cloud-based IoT platform, despite IoT common service functions supported at the edge nodes, the message/data traffic from IoT sensors still needs to traverse back and forth to the IoT platform, thus increasing the latency and reducing the overall performance efficiency.

Hence, it is important for IoT platforms running in the cloud to create and manage multiple virtual IoT services catering only to the necessary common service functions. Also, an important requirement for the edge nodes is that they should handle network slicing capabilities and serve the virtualised IoT service functions in addition to the resources representing IoT data and services.

To completely leverage the advantage of 5G networks, SDN, and NFV, the architecture must be designed to avoid network traffic traversing up and down from an IoT sensor or device to the application in the cloud. The cloud-based IoT platform must be designed to provide a common set of service functions such as registration and data management while edge computing at the edge nodes must cater to local data acquisition from the sensors, data processing, and decision-making.

Specifically, for IoT or IoV use cases that require low latency (< 1 ms) and mission-critical service, such as industrial applications or connected vehicles, it may not be possible to reduce the RTT for messages between an end IoT device or application and the central cloud platform, even if the networks are deployed closer to the end-users using SDN/NFV.
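To make the latency constraint concrete, the following minimal sketch estimates propagation-only RTT as a function of distance. It assumes signals travel through optical fibre at roughly two-thirds of the speed of light (about 200 km per millisecond); the function names and the two distances are hypothetical and chosen only for illustration. Queuing, radio access, and processing delays are ignored, so real RTTs would be higher.

```python
# Assumption: propagation speed in optical fibre is about 2e8 m/s,
# i.e. roughly 200 km per millisecond.
SPEED_IN_FIBRE_KM_PER_MS = 200.0


def round_trip_time_ms(distance_km: float, processing_ms: float = 0.0) -> float:
    """Propagation RTT to a server distance_km away, plus optional processing delay."""
    one_way_ms = distance_km / SPEED_IN_FIBRE_KM_PER_MS
    return 2 * one_way_ms + processing_ms


def max_server_distance_km(rtt_budget_ms: float) -> float:
    """Largest server distance that still fits a propagation-only RTT budget."""
    return (rtt_budget_ms / 2) * SPEED_IN_FIBRE_KM_PER_MS


# Hypothetical distances: an edge node 10 km away and a cloud region 1500 km away.
edge_rtt = round_trip_time_ms(10)
cloud_rtt = round_trip_time_ms(1500)
limit_km = max_server_distance_km(1.0)

print(f"edge RTT:  {edge_rtt:.2f} ms")
print(f"cloud RTT: {cloud_rtt:.2f} ms")
print(f"max distance for a 1 ms RTT budget: {limit_km:.0f} km")
```

Under these assumptions, a 1 ms budget is already exhausted by propagation alone once the server is about 100 km away, which is why no amount of network optimisation can bring a distant cloud platform within such a budget.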

In recent research, a novel IoT architecture was proposed with two distinct features: (1) the virtualisation of an IoT platform with minimum functions to support specific IoT services, and (2) hosting of the IoT instance in an edge node close to the end-user.

In this architecture, low latency and effective IoT service management at the edge node are assured because the message/data traffic for the end-user need not traverse back and forth to the cloud, and the IoT instance provides its service at the edge node, which is co-located with the MEC node with network slicing. Studies showed that the data transmission time in the new IoT architecture is halved compared with the conventional cloud-based IoT platform.

Recent developments in connected vehicles include the successful adaptation of 5G networks and SDN/NFV to provide Ultra-Reliable Low Latency Communications (URLLC). This is supplemented by MEC, which enables vehicles to obtain network resources and computing capability in addition to meeting the ever-increasing vehicular service requirements.

In IoV architecture, the MEC servers are typically co-located with Roadside Units (RSUs) to provide computing and storage capabilities at the edge of the vehicular networks. The communications between the vehicles and the RSUs are enabled by small cell networks. An access point (AP) is provided at the local 5G base station (BS) where the edge servers are typically co-located. The vehicles or end-users can access the services through the AP at the BS through the edge server located nearby, which improves the latency for the IoT devices compared to the communication with a remote IoV cloud platform.

Network Slicing in Connected Vehicles

The increased computing power available within modern vehicles helps carry out numerous in-vehicle applications. However, specific requirements such as autonomous driving capabilities and new applications such as dynamic traffic guidance, human-vehicle dynamic interaction, and road/weather-based augmented reality (AR) demand powerful computing capability, massive data storage/transfer/processing, and low latency for faster decision-making. Recent research has provided a new IoT architecture comprising two core concepts: network slicing (NS) and task offloading.

Network slicing divides a physical network into dedicated, logically separated network instances that provide service to the end-users. The NS and MEC architecture in a 5G environment, as proposed in 3GPP standards, is shown in Figure 2 [6]. Through NS technology, which builds on SDN and NFV, physical network infrastructure can be shared to provide flexible and dynamic virtual networks that can be tailored to specific services or applications. NS adopts NFV and enables the division of IoT/IoV services by functionality, offering flexibility and fine granularity in the execution of functions at the edge nodes.

Each network slice is assured of the availability of network resources such as virtualised server resources and virtualised network resources. In the context of connected vehicles, as outlined in the 3GPP standards, a slice is envisaged as a separate, self-contained network that provides specific network capabilities akin to a regular physical network.

Each network slice is isolated from the others to ensure that errors or failures in one slice do not affect communication in other slices. From the vehicle-to-everything (V2X) perspective, NS helps to support specific use cases such as cooperative manoeuvring, autonomous capabilities, remote driving, and enhanced safety.

Some of these use cases have conflicting and diverse requirements related to high-end computing, latency, dynamic data storage and processing, throughput, and reliability. Using NS, one or more network slices can be designed and bundled to support multiple conflicting V2X requirements and concurrently provide Quality of Service (QoS).
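The idea of matching conflicting V2X requirements to different slices can be sketched as follows. This is an illustrative model only: the `Slice` and `UseCase` types, the slice names, and all QoS figures are hypothetical and are not taken from the 3GPP specifications or the cited studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    name: str
    max_latency_ms: float       # worst-case latency the slice guarantees
    min_throughput_mbps: float  # throughput the slice guarantees
    reliability: float          # guaranteed delivery probability, 0..1


@dataclass(frozen=True)
class UseCase:
    name: str
    latency_ms: float           # latency the use case can tolerate
    throughput_mbps: float      # throughput the use case needs
    reliability: float          # reliability the use case needs


def slices_for(use_case: UseCase, slices: list[Slice]) -> list[str]:
    """Return the names of all slices whose guarantees satisfy the use case."""
    return [
        s.name
        for s in slices
        if s.max_latency_ms <= use_case.latency_ms
        and s.min_throughput_mbps >= use_case.throughput_mbps
        and s.reliability >= use_case.reliability
    ]


# Hypothetical slices and use cases with deliberately conflicting needs.
slices = [
    Slice("low-latency", max_latency_ms=5, min_throughput_mbps=30, reliability=0.99999),
    Slice("high-bandwidth", max_latency_ms=50, min_throughput_mbps=500, reliability=0.999),
]
remote_driving = UseCase("remote driving", latency_ms=10, throughput_mbps=25, reliability=0.99999)
infotainment = UseCase("infotainment", latency_ms=100, throughput_mbps=100, reliability=0.99)

print(slices_for(remote_driving, slices))
print(slices_for(infotainment, slices))
```

Neither slice alone satisfies both use cases, which illustrates why several slices are bundled on the same physical infrastructure.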

Task Offloading in Connected Vehicles

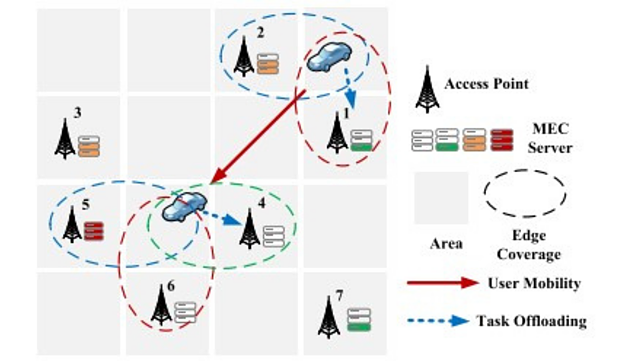

A schematic of dynamic task offloading (TO) in vehicular edge computing is shown in Figure 3 [7], which contains multiple network access points around the moving vehicles. As the vehicle moves from the top right corner to the new position in the direction indicated by the arrow, the task units (TUs) are initially offloaded to the edge server numbered 1, close to the initial position of the car.

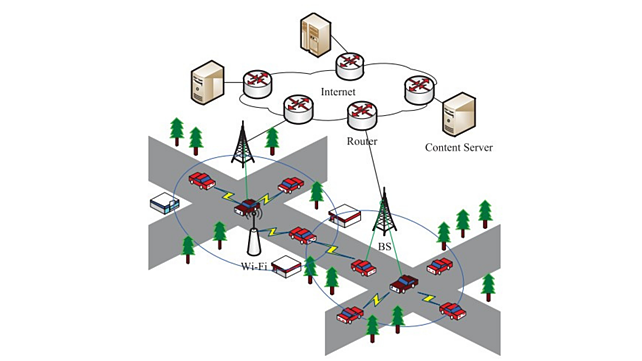

To maintain the QoS during the movement of the vehicle, the unfinished TUs need to be offloaded to a new edge server (either 4 or 5 or 6) close to the new position of the car. A similar concept of data offloading integrated with cellular networks and vehicular ad hoc networks is shown in Figure 4 [8].
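The re-offloading step described above can be sketched with a simple nearest-server heuristic. This is an assumption made for illustration and not the joint optimisation method of [7]; the server coordinates, vehicle positions, and task-unit names are hypothetical.

```python
import math


def nearest_server(vehicle_xy, servers):
    """Return the id of the edge server closest to the vehicle position."""
    return min(servers, key=lambda sid: math.dist(vehicle_xy, servers[sid]))


def reoffload(assignments, finished, new_server):
    """Move every unfinished task unit to new_server; finished ones stay put."""
    return {
        tu: (server if tu in finished else new_server)
        for tu, server in assignments.items()
    }


# Hypothetical layout: server ids mapped to (x, y) positions in km.
servers = {1: (10, 10), 4: (0, 5), 5: (2, 2), 6: (5, 0)}

# The vehicle starts near server 1 and offloads three task units there.
start = nearest_server((9, 9), servers)
assignments = {"TU-a": start, "TU-b": start, "TU-c": start}

# After moving, only TU-a has finished; the rest follow the vehicle.
new_server = nearest_server((2, 3), servers)
assignments = reoffload(assignments, finished={"TU-a"}, new_server=new_server)

print(start, new_server, assignments)
```

A production scheme would also weigh server load, link quality, and migration cost rather than distance alone.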

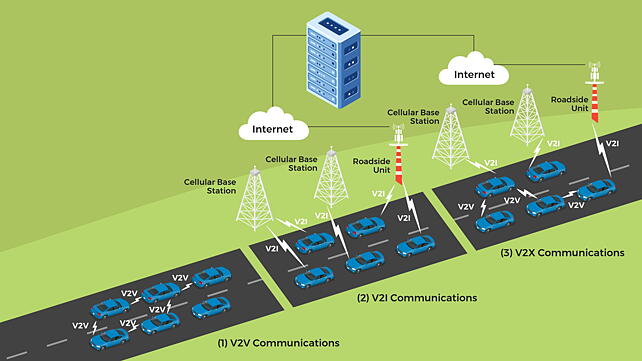

Recent studies propose the concept of IoT/IoV task offloading along with NS to provide similar quality of services at the edge nodes by creating IoT/IoV resources that are typically operated in the central cloud platform. As shown in Figure 5 [8], multiple technologies have evolved in V2X communications on TO and load reduction in wireless communication networks.

TO is segregated into three categories: (1) through V2V communications, (2) through V2I communications or (3) through V2X communications (a hybrid of multiple methods).

By deploying both the IoT/IoV service slice and the 5G network slice at the same edge node close to the end-users, IoT/IoV functions and services can be provided seamlessly and efficiently, with latency for data or message transmission reduced to as little as half its earlier value.

To carry out increasingly complex and powerful computations requiring large data storage resources in modern vehicular communication networks, edge computing nodes hosted at 5G next-generation NodeBs (gNBs) or RSUs are utilised, with NS and resource-optimal load balancing concepts applied. This concept uses the NFV framework to manage the data, balances the loads between the slices on each node, and supports multiple edge computing nodes, resulting in resource savings of up to 48%.

Edge Computing

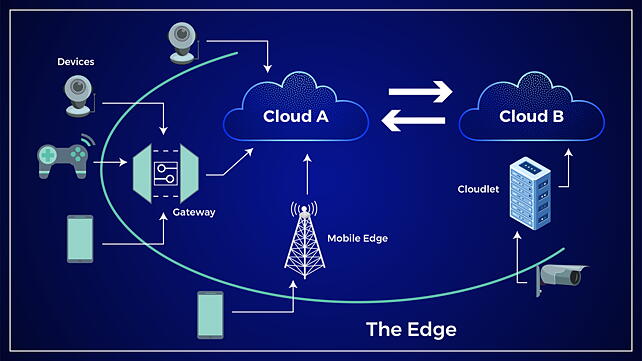

In a cyber-physical system (CPS), the edge is likely to be the system to which IoT devices are connected. An infrastructure able to support different kinds of edge applications (given the above definition of the edge) might be quite complex. A representative complex cloud infrastructure is shown in Figure 6 [3], with heterogeneous IoT devices, multiple clouds, gateways, mobile base stations, and cloudlets.

To realise the benefits of Edge Computing and leverage the ultra-low latency and ultra-high reliability of 5G networks with little impact on security and data privacy, it is imperative to move the physical infrastructure elements closer to where the data needs to be processed.

The value chain in edge computing is being transformed into a value network through enhanced connectivity and 5G network availability aided by new applications and services such as AI, IoT, and IoV, and the demand to provide service and data/messages in real-time with low latency and ultra-high reliability.

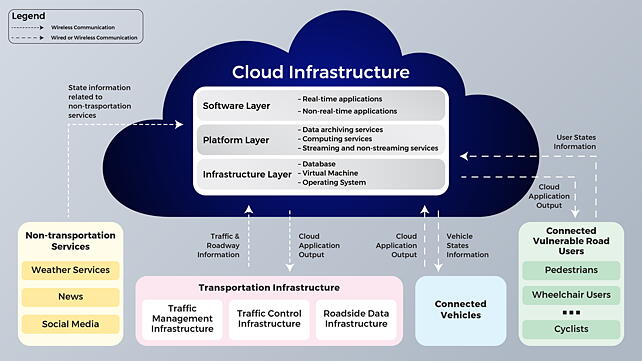

A cloud-based architecture for connected vehicles is shown in Figure 7 [9] that combines both wired and wireless communications and presents the integrated cloud services connecting non-transportation units (NTUs), traffic management and control, roadside infrastructure, connected vehicles, and vulnerable road users (VRUs).

The number of connected vehicles will increase dramatically in the next decade, along with the volume of data from telematics, infotainment, location-based services, and so on. This puts enormous strain on the underlying mobile networks and justifies the combination of 5G networks and MEC. Embedded edge computing in the vehicles is the need of the hour, as it provides a framework for supporting an increasing number of connected vehicles and the associated large-scale data transfer and processing in the existing networks.

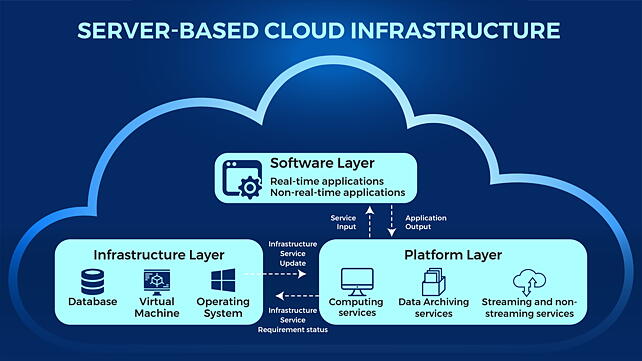

For a cost-effective solution, the commercial cloud and the associated hardware and software provide a viable option. The cloud architecture shown in Figure 7 comprises an infrastructure layer, a platform layer, and a software layer [9]. The bottom infrastructure layer provides infrastructure-as-a-service (IaaS) to the cloud users and application developers in the form of server instances (virtual machines) through hardware components besides database resources and operating systems.

The middle platform layer provides a worry-free underlying infrastructure and forms the basis for platform-as-a-service (PaaS) by enabling developers to build applications using multiple cloud services, including streaming and non-streaming computing and database management.

The top software layer provides the software-as-a-service (SaaS) to the developers who can upload the data from NTUs or transportation infrastructure or connected vehicles or VRUs (Figure 7), deploy their applications in the cloud, and monitor/analyse the output from the applications running in the cloud.

Depending upon the needs of connected vehicle applications and the requirements, the cloud-based architecture shown in Figure 7 can operate in real-time or non-real-time. The cloud services must meet the QoS agreements through compliance with the computing and latency requirements. Besides, for efficient real-time cloud services of connected vehicles, the components of the architecture must meet the requirements related to real-time cloud computing, real-time data transmission, and data archiving. A reference edge computing platform consisting of a device layer, edge cloud layer, network layer, back-end layer, and application layer is shown in Figure 8 [10].

Real-Time Cloud Computing

Real-time cloud computing in a cloud-based connected vehicles application involves multiple activities such as data acquisition from IoT devices, data uploading from the database, data processing, and message/data downloading while meeting the latency requirements. A server-based architecture mandates application developers to establish a cloud server instance to carry out their computing needs. This in turn demands significant expertise, cost, and time for hardware set-up and virtual environment configuration.

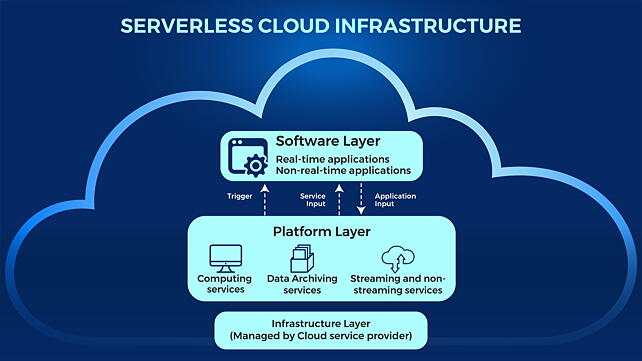

Additionally, this arrangement is not conducive to the dynamic scaling of computing resources to meet varying demands. To meet these challenges, a new “serverless cloud computing architecture” has emerged. It relieves application developers of the burden of setting up or configuring the hardware and virtual environment, letting them focus exclusively on application development, and it scales dynamically to allocate resources with no additional requests or intervention from the developers.

As serverless architecture is not burdened with the cumbersome, expensive, and time-consuming establishment and maintenance of server instances, it quickly evolved into a cost-effective alternative to server-based computing.

The details of and important differences between server-based and serverless cloud computing are provided next. The cloud infrastructure shown in Figure 9 can be segregated into two types: server-based as shown in Figure 9 (a) or serverless as shown in Figure 9 (b).

Server-Based Cloud Computing

For a real-time or non-real-time cloud computing application, developers first need to establish a server instance in the commercial cloud, as shown in Figure 9 (a) [9]. For a given application, the server instance calls for the configuration of dedicated or virtualised hardware (e.g., CPU, storage, memory), operating systems (OS), and software coding platforms (e.g., language environment, compilers, libraries).

The Application Programming Interface (API) helps the applications interact with other cloud services to carry out different functionalities. Though developers can customise the computing capabilities to meet specific demands, they need to be experts in configuring and maintaining server instances and must spend a sizeable amount of time and effort on this. Further, because a server instance does not scale with demand, the potential wastage of computing capacity is high when an application needs fewer resources than were provisioned.

Serverless Cloud Computing

In the case of serverless cloud computing, as shown in Figure 9 (b) [9], the application developers (a) are not required to establish server instances, (b) can focus solely on application development, and (c) are relieved of the burden of creating, configuring, maintaining, and operating the server instances.

This architecture supports multiple programming languages such as Python, .NET, Java, and Node.js. The applications in serverless cloud computing are typically configured to be activated automatically by specific triggers such as input data availability, a database update, or the actions of other cloud services, and they remain active until the task is completed.

Upon task completion, the commercial cloud automatically releases the computing resources, which enables dynamic scaling under varying loads. This results in a more efficient and cost-effective cloud architecture, with additional savings in time and effort.
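The trigger-driven lifecycle described above can be illustrated with a minimal, stateless function. The `(event, context)` signature follows the handler convention used for Python functions on AWS Lambda; the payload fields (`vehicle_id`, `speed_kmh`, `limit_kmh`) and the default speed limit are hypothetical and invented for this sketch. Here the function is invoked locally, whereas in a real deployment the platform would call it when the trigger fires.

```python
import json


def handler(event, context=None):
    """Entry point invoked once per trigger; no state survives between calls."""
    readings = event.get("speed_kmh", [])
    if not readings:
        return {"statusCode": 400, "body": json.dumps({"error": "no readings"})}

    average = sum(readings) / len(readings)
    body = {
        "vehicle_id": event.get("vehicle_id"),
        "avg_speed_kmh": average,
        # Hypothetical default limit of 50 km/h when the event carries none.
        "speeding": average > event.get("limit_kmh", 50),
    }
    return {"statusCode": 200, "body": json.dumps(body)}


# Local invocation with a hypothetical event, standing in for a cloud trigger.
response = handler({"vehicle_id": "v1", "speed_kmh": [40, 60, 80], "limit_kmh": 50})
print(response)
```

Because the function keeps no state and returns as soon as its task is done, the platform is free to release its resources immediately afterwards.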

Function as a Service (FaaS), also known as serverless computing, allows developers to upload and execute code in the cloud easily without managing servers and by making hardware an abstraction layer in cloud computing [11], [12], [13]. FaaS computing enables developers to deploy several short functions with clearly defined tasks and outputs and not worry about deploying and managing software stacks on the cloud.

The key characteristics of FaaS include resource elasticity, zero ops, and pay-as-you-use. Serverless cloud computing frees the developers from the responsibility of server maintenance and provides event-driven distributed applications that can use a set of existing cloud services directly from their application such as cloud databases (Firebase or DynamoDB), messaging systems (Google Cloud Pub/Sub), and notification services (Amazon SNS).

Serverless computing also enables the execution of custom application code in the background using special cloud functions such as AWS Lambda, Google Cloud Functions (GCF), or Microsoft Azure Functions. Compared with IaaS (Infrastructure-as-a-Service), serverless computing offers a higher level of abstraction and hence promises to deliver higher developer productivity and lower operating costs.

Functions, each focused on executing a specific operation, form the basis of serverless computing. They are essentially small software programmes that usually run independently of any operating system or other execution environment and can be deployed on the cloud infrastructure, triggered by an external event such as (a) a change in a cloud database (uploading or downloading of files), (b) a new message or an action scheduled at a pre-determined time, or (c) a direct request from the application triggered through an API.

Several short functions can run in parallel, independently of each other, and are managed by the cloud provider. Trigger events can also include a request to an interface that the cloud provider manages for the customer, with the developer writing the function that handles that event.
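The notion of several short, independent functions running in parallel can be imitated locally with a thread pool. This is only an analogy for what a cloud provider does at much larger scale; the `process_reading` function, the event fields, and the 4.0 m/s² threshold are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def process_reading(reading):
    """A short, stateless function: one input event in, one result out."""
    # Hypothetical rule: deceleration above 4.0 m/s^2 counts as harsh braking.
    return {"id": reading["id"], "harsh_braking": reading["decel_ms2"] > 4.0}


# Hypothetical events arriving from three vehicles at about the same time.
events = [
    {"id": 1, "decel_ms2": 2.5},
    {"id": 2, "decel_ms2": 6.1},
    {"id": 3, "decel_ms2": 4.0},
]

# Each event is handled by its own invocation; none depends on another.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(process_reading, events))

print(results)
```

Since the invocations share no state, the order in which they complete does not matter, which is what lets a provider scale them out freely.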

In serverless computing, functions are hosted in an underlying cloud infrastructure that provides monitoring and logging as well as automatic provisioning of resources such as storage, memory, and CPU, and that scales to adjust to varying functional loads. Developers focus solely on providing executable code as mandated by the serverless computing framework and have the flexibility to work with multiple programming languages such as Node.js, Java, and Python, which are supported by platforms such as AWS Lambda and Google Cloud Functions [11], [12].

However, developers have no control over the execution environment, the underlying operating system, or the runtime libraries; their freedom is limited to uploading executable code in the required format and using custom libraries along with the associated package managers. The fundamental difference between functions and Virtual Machines (VMs) in IaaS cloud architecture is that VM users have full control over the OS, including root access, and can customise the execution environment to suit their requirements, whereas developers using functions are not burdened with the cumbersome tasks of configuring, maintaining, and managing server resources.

Functions essentially serve individual tasks and typically live only until execution completes, unlike long-running, permanent, and stateful services. Hence, functions are more suitable for high-throughput computations consisting of fine-grained tasks, with serverless computing offering cost-effective solutions compared to VMs [11], [12], [13].
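The cost argument can be illustrated with simple arithmetic comparing an always-on VM with pay-as-you-use functions. The billing model assumed here (a per-request fee plus a charge per GB-second of execution) reflects how FaaS platforms commonly bill, but every price and workload figure below is hypothetical and not taken from any provider's price list.

```python
def vm_monthly_cost(hourly_rate, hours=730):
    """Cost of a VM that runs all month, whether or not it is busy."""
    return hourly_rate * hours


def faas_monthly_cost(invocations, seconds_per_call, memory_gb,
                      price_per_gb_second, price_per_million_requests):
    """Cost of functions billed only for the time and memory actually used."""
    compute = invocations * seconds_per_call * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


# Hypothetical prices and workload.
vm_cost = vm_monthly_cost(hourly_rate=0.10)
faas_cost = faas_monthly_cost(
    invocations=2_000_000,
    seconds_per_call=0.2,
    memory_gb=0.5,
    price_per_gb_second=0.00002,
    price_per_million_requests=0.20,
)

print(f"VM:   {vm_cost:.2f} per month")
print(f"FaaS: {faas_cost:.2f} per month")
```

With these assumed figures the bursty workload is far cheaper on functions, but the balance shifts as utilisation rises: a workload that keeps a VM busy around the clock may well cost less on the VM.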

Recently, novel architectural concepts have been proposed that virtualise IoT/IoV common service functions to provide services at the edge nodes close to the end-users. Further, studies indicate the benefit of edge computing through the offering of virtualised IoT services at the same edge node with a 5G network slice to achieve higher throughput and lower latency and reduce RTT by half compared to the earlier concept.

References

[1]. K.L. Lueth, State of the IoT 2018: Number of IoT Devices now at 7B – Market Accelerating, Aug 8, 2018. https://iot-analytics.com/state-of-the-iot-update-q1-q2-2018-number-of-iot-devices-now-7b/

[2]. Datatronic, How does containerization help the automotive industry? What are the advantages of Kubernetes rides shotgun? https://datatronic.hu/en/containerisation-in-automotive-industry/

[3]. S. Rac and M. Brorsson, At the Edge of a Seamless Cloud Experience, https://arxiv.org/pdf/2111.06157.pdf

[4]. J. Napieralla, Considering Web Assembly Containers for Edge Computing on Hardware-Constrained IoT Devices, M.S. Thesis, Computer Science, Blekinge Institute of Technology, Karlskrona, Sweden (2020).

[5]. B. I. Ismail, E.M. Goortani, M.B. Ab Karim, et al., Evaluation of Docker as Edge computing platform, 2015 IEEE Conference on Open Systems (ICOS), 2015, pp. 130-135, https://doi.org/10.1109/ICOS.2015.7377291

[6]. A. Ksentini and P. A. Frangoudis, Toward Slicing-Enabled Multi-Access Edge Computing in 5G, IEEE Network, vol. 34, no. 2, pp. 99-105, (2020), https://doi.org/10.1109/MNET.001.1900261.

[7]. L. Tang, B. Tang, et al., Joint Optimization of network selection and task offloading for vehicular edge computing, Journal of Cloud Computing: Advances, Systems and Applications (2021), https://doi.org/10.1186/s13677-021-00240-y

[8]. H. Zhou, H. Wang, X. Chen, et al., Data offloading techniques through vehicular Ad Hoc networks: A survey, IEEE Access, vol. 6, pp. 65250-65259 (2018), https://doi.org/10.1109/ACCESS.2018.2878552.

[9]. H. -W. Deng, M. Rahman, M. Chowdhury, M. S. Salek and M. Shue, Commercial Cloud Computing for Connected Vehicle Applications in Transportation Cyberphysical Systems: A Case Study, IEEE Intelligent Transportation Systems Magazine, vol. 13, no. 1, pp. 6-19, (2021), https://doi.org/10.1109/MITS.2020.3037314.

[10]. A. Wang, Z. Zha, Y. Gui, and S. Chen, Software-Defined Networking Enhanced Computing: A Network-Centric Survey, Proceedings of the IEEE, vol. 107, no. 8, pp. 1500-1519, (2019), https://doi.org/10.1109/JPROC.2019.2924377

[11]. M. Malawski, A. Gajek, A. Zima, B. Balis, K. Figiela, Serverless execution of scientific workflows: Experiments with Hyperflow, AWS Lambda, and Google Cloud Functions, Future Generation Computer Systems, Science Direct, vol. 110, no. 8, pp. 502-514, (2020), https://doi.org/10.1016/j.future.2017.10.029

[12]. L. F. Herrera-Quintero, J. C. Vega-Alfonso, K. B. A. Banse and E. Carrillo Zambrano, Smart ITS Sensor for the Transportation Planning Based on IoT Approaches Using Serverless and Microservices Architecture, IEEE Intelligent Transportation Systems Magazine, vol. 10, no. 2, pp. 17-27 (2018), https://doi.org/10.1109/MITS.2018.2806620.

[13]. S.V. Gogouvitis, H. Mueller, S. Premnadh, A. Seitz, B. Bruegge, Seamless computing in industrial systems using container orchestration, Future Generation Computer Systems (2018), https://doi.org/10.1016/j.future.2018.07.033

[14]. J.Y. Hwang, L. Nkenyereye, N.M. Sung, et al., IoT service slicing and task offloading for edge computing, IEEE Journal, 44, 4, (2020).

About the Author: Dr Arunkumar M Sampath heads Electric Vehicle projects at Tata Consultancy Services (TCS) in Chennai. His interests include hybrid and electric vehicles, connected and autonomous vehicles, cybersecurity, extreme fast charging, functional safety, advanced air mobility (AAM), AI, ML, data analytics, and data monetisation strategies.