Read: Towards Seamless Edge Computing in Connected Vehicles – Part 1

Serverless Computing – Scalability

In real-world connected-vehicle scenarios, with peak and lean traffic periods and changing road and weather conditions, the associated cyber-physical system (CPS) must also scale automatically. Although serverless cloud computing is known for automatic scaling, the underlying commercial cloud service providers (e.g., AWS, Azure, Google Cloud) set upper limits on memory usage, which restricts their support for a large-scale CPS operating in real time.

To overcome this, it has been proposed that the functions handling traffic and connected-vehicle data run in parallel to improve throughput, so that large volumes of data can be handled without compromising latency requirements [11], [12].
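The parallel-function idea can be illustrated with a minimal Python sketch. It assumes each vehicle message can be processed by an independent, stateless function; the handler `process_message`, the message fields, and the use of a local thread pool to stand in for concurrent serverless invocations are all hypothetical and do not represent any cloud provider's API.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def process_message(msg: dict) -> dict:
    """Hypothetical stateless handler for one vehicle message."""
    time.sleep(0.05)  # stands in for I/O-bound work such as a database or network call
    return {"vehicle_id": msg["vehicle_id"], "speed_kmh": round(msg["speed_ms"] * 3.6, 1)}


def handle_batch(messages: list[dict], max_parallel: int) -> list[dict]:
    """Fan a batch out to at most max_parallel concurrent invocations."""
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # map() preserves input order, so results line up with the messages
        return list(pool.map(process_message, messages))


if __name__ == "__main__":
    batch = [{"vehicle_id": i, "speed_ms": 10.0 + i} for i in range(20)]
    for workers in (1, 10):
        start = time.perf_counter()
        results = handle_batch(batch, workers)
        elapsed = time.perf_counter() - start
        print(f"{workers:>2} parallel invocations: {len(results)} messages in {elapsed:.2f} s")
```

Because the simulated work is I/O-bound, overlapping the waits lets ten parallel invocations finish the batch roughly ten times faster than one. On a real serverless platform, each invocation would instead run in its own instance, subject to the provider's concurrency and memory limits mentioned above.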

IoT Function Modularisation Using Micro-Services and Virtualisation

One of the first steps toward offering IoT/IoV services through NS is the modularisation of the IoT/IoV platform. In current practice, a centralised cloud service hosts almost all the IoT service functions, such as device management, registration, and discovery. This is a challenge for users who expect different types of IoT services, computational resources, and modularised IoT functions at the edge.

This can be addressed by dividing the IoT platform into multiple small components offered as micro-services, each of which can be deployed, scaled, and tested independently, thus improving the efficiency and agility of services and functions. The modularised IoT functions provide a flexible and agile development environment, since software can be deployed independently and the dependency on the centralised cloud is reduced.

Container Technologies

Recent developments have led to container-based architectures, in which software is packaged in containers together with a virtualised operating-system environment and file system that execute on top of the native system, making deployment to heterogeneous systems easier [11], [12]. Container technologies make code portable, so that it runs properly and independently of the host on which it is deployed, largely irrespective of the underlying hardware or operating system.

Containers thus allow developers to test code on separate development machines and, once it runs successfully, deploy it on the IoT platform alongside other functions. Containers offer multiple advantages, including fast start-up, low overhead, and good isolation, and developers need to build, compile, test, and deploy the code only once for the virtualised platform.

The virtualisation layer is responsible for translating the actions inside the container for the underlying system, without burdening developers. Containers also provide an isolated sandbox for the programmes inside them, and such sandboxes can be constrained (for example, in the CPU and memory they may use) far more easily than programmes running directly on the native platform, which makes containers easier for developers to work with. Moreover, because a container is essentially an isolated sandbox, spurious software or malware that affects one container is unlikely to affect the rest of the applications.

In an application or software stack, an overseeing container orchestration framework can control multiple containers (sandboxes) running in parallel without one container affecting another, and can readily deploy and manage large container-based software stacks. Although running software in sandboxed containers provides virtualisation and isolation, the drawback is reduced performance due to the additional software overhead. This may not be a hindrance on high-performance computing (HPC) machines, but it can affect IoT devices performing edge computing.

Still, containers, which virtualise multiple platforms and services, are a preferred choice among software developers, with Docker and WebAssembly (Wasm) gaining increasing acceptance in the software community for implementing micro-services. Although Docker is currently the dominant container technology, its shortcomings include large, complex systems and limitations when running on hardware-constrained IoT devices.

WebAssembly containers, though relatively new, appear to be a promising solution for running small, lightweight containers and, with their simpler runtime, are better suited to hardware-constrained IoT devices in edge computing. Additionally, many software developers working with 5G networks recognise Kubernetes, an open-source container orchestration system, as an effective way to manage operational resources and to scale with varying load demands [11], [12], [13].
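Load-dependent scaling in Kubernetes is commonly handled by the Horizontal Pod Autoscaler (HPA), whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio lies within a tolerance (0.1 by default). The Python sketch below re-implements only that rule for illustration; the function name, default replica bounds, and example metrics are assumptions, and the real controller adds behaviour such as stabilisation windows and special handling of pods that are not ready.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count following the HPA rule ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the deployment unchanged
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
    print(desired_replicas(3, current_metric=63, target_metric=60))  # 3 (within tolerance)
```

For example, three containers averaging 90% CPU against a 60% target would be scaled to ceil(3 × 1.5) = 5 replicas, while a small deviation such as 63% is ignored to avoid constant rescaling.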

Multi-access Edge Computing Combined With NS & TO

As the number of IoT devices has been increasing significantly year on year, the European Telecommunications Standards Institute (ETSI) Multi-access Edge Computing (MEC) Industry Specification Group (ISG) has been developing standards, use cases, an architecture, and APIs to overcome the latency challenge and ensure ultra-high reliability for real-time services in connected vehicles, smart cities, smart factories, etc.

The ISG is also working on creating an open environment that provides a vendor-neutral MEC framework, and on defining MEC APIs for IoT systems.

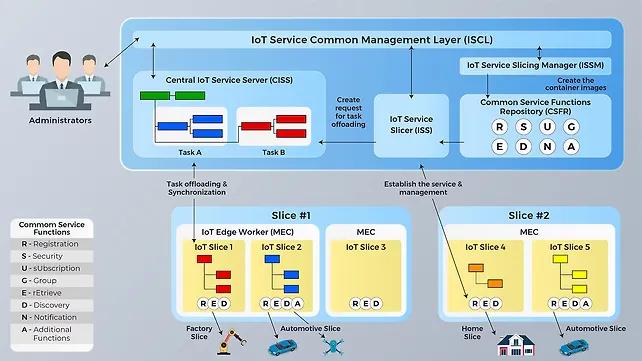

Figure 10 shows a reference architecture for IoT network slicing and task offloading [14]. As explained earlier, using network or service slicing, the common service functions of the IoT platform can be modularised into small micro-services and deployed at the edge nodes. Only the IoT micro-services that a service requires are selected to create a virtual IoT platform instance, which is then deployed to the edge nodes.

This improves efficiency, as each IoT service slice is optimised for a specific use case, and it allows multiple IoT services to be provided at scale as required. The IoT platform in Figure 10 [14] follows a Resource-Oriented Architecture (ROA), in which task offloading is carried out by transferring the necessary IoT resources from the cloud to the edge nodes that execute the requested service.

As shown in Figure 10 [14], there are multiple service requests (Home, Automotive, Drone, Factory, etc.) that may need low latency. The IoT platform determines which IoT common service functions are required to satisfy the requests from the different IoT devices and accordingly assigns an IoT slice containing the required common service functions as micro-services.
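The slice-assignment step can be sketched as a set union: each service type maps to the common service functions (CSFs) it needs, and the slice is the smallest set of CSF micro-services that covers all pending requests. The mapping below is purely hypothetical; the CSF names are loosely modelled on oneM2M common service functions, and which functions each service actually needs would come from the real platform.

```python
# Hypothetical mapping from service type to the CSF micro-services it needs
REQUIRED_CSFS: dict[str, frozenset[str]] = {
    "home": frozenset({"registration", "data_management"}),
    "automotive": frozenset({"registration", "discovery", "subscription_notification", "location"}),
    "drone": frozenset({"registration", "device_management", "location"}),
    "factory": frozenset({"registration", "device_management", "data_management",
                          "subscription_notification"}),
}


def assign_slice(request_types: list[str]) -> frozenset[str]:
    """Smallest set of CSF micro-services that covers every requested service type."""
    needed: set[str] = set()
    for service in request_types:
        if service not in REQUIRED_CSFS:
            raise ValueError(f"unknown service type: {service}")
        needed |= REQUIRED_CSFS[service]
    return frozenset(needed)


if __name__ == "__main__":
    print(sorted(assign_slice(["home", "automotive"])))
    # ['data_management', 'discovery', 'location', 'registration', 'subscription_notification']
```

Only the functions that the pending requests actually need are instantiated, which is what keeps each slice small enough for a resource-constrained MEC node; here, for instance, device management is left out entirely.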

The IoT platform then checks the availability of MEC nodes near the specific IoT device and selects one MEC node to host the instantiated IoT slice. Next, the IoT platform offloads the resources to the MEC node to execute the service requested by the IoT device, thus fulfilling both IoT NS and TO.
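The node-selection step could look like the following sketch, which assumes the platform knows each candidate MEC node's measured latency to the device and its free CPU and memory. The `MecNode` fields, node names, and the lowest-latency-first policy are illustrative assumptions rather than the method of [14].

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MecNode:
    name: str
    latency_ms: float   # measured round-trip latency to the requesting IoT device
    free_cpu: float     # unallocated CPU cores
    free_mem_mb: int    # unallocated memory in MB


def select_mec_node(nodes: list[MecNode], cpu_needed: float, mem_needed_mb: int,
                    max_latency_ms: float) -> Optional[MecNode]:
    """Return the lowest-latency node able to host the slice, or None."""
    candidates = [
        n for n in nodes
        if n.free_cpu >= cpu_needed
        and n.free_mem_mb >= mem_needed_mb
        and n.latency_ms <= max_latency_ms
    ]
    return min(candidates, key=lambda n: n.latency_ms, default=None)


if __name__ == "__main__":
    nearby = [
        MecNode("rsu-1", latency_ms=4.0, free_cpu=0.5, free_mem_mb=256),
        MecNode("rsu-2", latency_ms=7.5, free_cpu=2.0, free_mem_mb=1024),
        MecNode("metro-edge", latency_ms=25.0, free_cpu=16.0, free_mem_mb=8192),
    ]
    chosen = select_mec_node(nearby, cpu_needed=1.0, mem_needed_mb=512, max_latency_ms=20.0)
    print(chosen.name if chosen else "no suitable MEC node")  # rsu-2
```

Returning `None` signals that no nearby node can host the slice, in which case the request would presumably remain on the central cloud.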

For the effective realisation of MEC and the offering of services at the edge nodes, it is imperative to carry out NS and TO, as well as data transfer and processing and the containerisation of IoT functions, at the edge nodes. A task is defined as a set of resources, representing physical infrastructure, sensors, RSUs, etc., together with their associated data. Once a suitable edge node has been identified to serve a sliced IoT platform, task offloading must be carried out to place the resources required to support the service at that edge node.

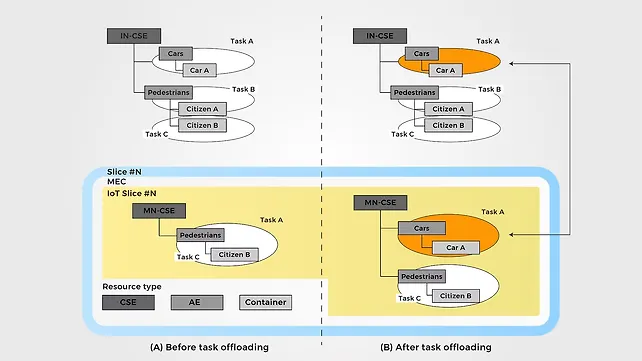

Figure 11 [14] shows a use case of connected vehicles operating in a smart city, in which messages about pedestrians on a crosswalk are passed on to cars approaching the intersection, and compares two scenarios: before and after task offloading. In Figure 11 (a), task A (car A) and task B (pedestrian citizen A) are assumed to run on the central IoT cloud platform, while task C (pedestrian citizen B) is assumed to run on the edge node. Pedestrian citizen B therefore experiences edge-based IoT services.

To meet specific latency requirements, the central IoT cloud must offload the tasks to the edge gateway, and the resource structure, comprising the CSE (Common Service Entity), AE (Application Entity), and Container resources, is reorganised as shown in Figure 11 (b). Car A now receives faster IoT services than before owing to the reduced latency. Similar use cases for connected vehicles involving micro-services at the edge nodes need to be developed in line with the ETSI MEC guidelines.
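The resource reorganisation in Figure 11 can be mimicked with a simple tree in which CSEs contain AEs and AEs contain Containers. The Python sketch below moves an AE subtree from the cloud CSE to the edge-gateway CSE; the `Resource` class, the resource names, and the `offload` helper are hypothetical simplifications and not oneM2M API calls.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """Simplified resource node: a CSE holds AEs, an AE holds Containers."""
    name: str
    kind: str  # "CSE", "AE" or "Container"
    children: list["Resource"] = field(default_factory=list)

    def find(self, name: str) -> "Resource | None":
        # Searches direct children only, which suffices for AEs registered under a CSE
        return next((c for c in self.children if c.name == name), None)


def offload(ae_name: str, cloud_cse: Resource, edge_cse: Resource) -> Resource:
    """Move an AE, together with its Containers, from the cloud CSE to the edge CSE."""
    ae = cloud_cse.find(ae_name)
    if ae is None or ae.kind != "AE":
        raise KeyError(f"no AE named {ae_name!r} under {cloud_cse.name}")
    cloud_cse.children.remove(ae)
    edge_cse.children.append(ae)
    return ae


if __name__ == "__main__":
    cloud = Resource("cloud-cse", "CSE", [
        Resource("car-A", "AE", [Resource("crosswalk-alerts", "Container")]),
        Resource("citizen-A", "AE", [Resource("location", "Container")]),
    ])
    edge = Resource("edge-gateway-cse", "CSE", [Resource("citizen-B", "AE")])
    for ae_name in ("car-A", "citizen-A"):
        offload(ae_name, cloud, edge)
    print([ae.name for ae in edge.children])  # ['citizen-B', 'car-A', 'citizen-A']
    print(cloud.children)                      # []
```

Moving the whole AE subtree keeps each application's Containers (and hence its data) together, which is the property that lets car A be served entirely from the edge gateway after offloading.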

IoT Service Slicing Based On Micro-Services

It is generally believed that if the edge nodes have enough CPU and memory resources, deploying an IoT service platform that supports all common service functions at the edge nodes is relatively easy. The challenge lies in providing granular IoT services at edge nodes with limited CPU and memory resources, using slicing and task offloading based on micro-services.

The advantages of a micro-services-based IoT platform include: (a) less complexity in rebooting the system, especially the micro-services at the edge nodes, in case of unexpected errors; (b) no need to implement subscription or notification functionalities in hardware for seemingly simple tasks at the edge nodes, such as temperature or voltage measurement; and (c) no need to provision for stringent latency requirements in IoT services that do not require them, such as smart homes [14].

Although different IoT services have different latency and computation requirements, they can be operated on the same edge nodes. To ensure the highest Quality of Experience (QoE) for end users, the IoT service must be designed to handle the varying resource requirements (latency and computation) at the edge nodes dynamically, rather than assigning similar resources to every task, which is inefficient for seemingly simple tasks.
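One simple way to avoid uniform allocation is sketched below: the edge node grants CPU to services in order of their latency budgets, so the tightest deadlines are served first, and the allocation is recomputed whenever demands change. This greedy policy, the service names, and the figures are illustrative assumptions, not the scheduling method of [14].

```python
def allocate_cpu(services: list[tuple[str, float, float]], capacity: float) -> dict[str, float]:
    """Greedy allocation in which the tightest latency budget is served first.

    services: (name, latency_budget_ms, cpu_demand_cores) tuples.
    Returns {name: cores_granted}; a grant below demand means the service
    should be partly or fully handled elsewhere, e.g. in the cloud.
    """
    grants: dict[str, float] = {}
    remaining = capacity
    for name, _budget_ms, demand in sorted(services, key=lambda s: s[1]):
        granted = min(demand, remaining)
        grants[name] = granted
        remaining -= granted
    return grants


if __name__ == "__main__":
    demands = [
        ("smart-home", 1000, 0.5),
        ("collision-warning", 10, 2.0),
        ("traffic-stats", 500, 1.5),
    ]
    print(allocate_cpu(demands, capacity=3.0))
    # {'collision-warning': 2.0, 'traffic-stats': 1.0, 'smart-home': 0.0}
```

With 3.0 cores available, the latency-critical collision-warning service receives its full demand, the traffic-statistics service gets what is left, and the delay-tolerant smart-home service gets nothing locally and could be served from the cloud instead.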

IoT Service With Inherent Trust And Security Using Blockchain

In an IoT platform offering micro-services, following the blockchain framework with its decentralised public ledger, the data is stored at the edge nodes of the network in blocks, each logically linked to the previous one through its hash value. To prevent and protect against security breaches, data forgery, data alteration, and cyberattacks that may compromise data and privacy, these data blocks are copied and shared across the entire blockchain network [14].
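The hash linking described above can be shown in a few lines of Python. The sketch assumes SHA-256 over a canonical JSON encoding of each block; the `Block` layout and helper names are illustrative, and it covers only chaining and tamper detection, not the distribution of copies or the consensus among nodes.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV = "0" * 64  # placeholder predecessor hash for the first block


@dataclass
class Block:
    index: int
    data: dict
    prev_hash: str

    def digest(self) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        ).encode()
        return hashlib.sha256(payload).hexdigest()


def append_block(chain: list[Block], data: dict) -> Block:
    prev = chain[-1].digest() if chain else GENESIS_PREV
    block = Block(len(chain), data, prev)
    chain.append(block)
    return block


def is_valid(chain: list[Block]) -> bool:
    """Every block must carry the digest of the block before it."""
    if chain and chain[0].prev_hash != GENESIS_PREV:
        return False
    return all(chain[i].prev_hash == chain[i - 1].digest() for i in range(1, len(chain)))


if __name__ == "__main__":
    chain: list[Block] = []
    for event in ("pedestrian on crosswalk", "congestion", "road clear"):
        append_block(chain, {"rsu": "rsu-7", "event": event})
    print(is_valid(chain))             # True
    chain[1].data["event"] = "forged"  # tamper with a stored block
    print(is_valid(chain))             # False
```

Altering any block except the newest breaks the link from its successor; protecting the newest block, and agreeing on a single chain among the many copies, is left to the replication and consensus mechanism.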

A MEC framework with NS and TO may face multiple security challenges due to (a) heterogeneous data transfer across different IoT stakeholders and organisations, (b) unauthorised access to private data, and (c) data replication or unauthorised publishing of data. Heterogeneous data transfer is handled through transparent and secure collaboration with other servers or applications.

The issues of data privacy and of duplication or unauthorised publication arise from the separation of data ownership and control, and from the outsourcing of complete or partial responsibility when data is transferred between edge nodes or to the data centre in the cloud. Because conditions at the IoT nodes are highly dynamic and edge computing is inherently open, compromised data can seriously undermine data integrity or allow hackers to access or modify the data.

These issues can be resolved using blockchain technology, which connects MEC instances with the IoT platform to increase trust among IoT service slices.

Certain IoT platforms with NS and blockchain provide a resource that controls access rights; this resource stores an internally generated authentication key value that is used for device authentication. Before the IoT platform responds to a service request, it checks the presented authentication key value for a match with the stored values.
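The key check can be sketched as follows. The class and method names are hypothetical; storing a SHA-256 digest of each key rather than the key itself, and comparing with the standard library's constant-time `hmac.compare_digest`, are design choices made for this sketch, not details taken from [14].

```python
import hashlib
import hmac
import secrets


class AccessControlResource:
    """Hypothetical access-control resource holding one key digest per device."""

    def __init__(self) -> None:
        self._key_digests: dict[str, bytes] = {}

    def register_device(self, device_id: str) -> str:
        key = secrets.token_hex(16)  # authentication key generated internally by the platform
        # Keep only a digest, so a leaked table does not expose usable keys
        self._key_digests[device_id] = hashlib.sha256(key.encode()).digest()
        return key  # handed to the device once, during provisioning

    def authenticate(self, device_id: str, presented_key: str) -> bool:
        """Check a presented key against the stored value before serving a request."""
        stored = self._key_digests.get(device_id)
        if stored is None:
            return False
        presented = hashlib.sha256(presented_key.encode()).digest()
        # Constant-time comparison avoids leaking how many bytes matched
        return hmac.compare_digest(stored, presented)


if __name__ == "__main__":
    acr = AccessControlResource()
    key = acr.register_device("car-A")
    print(acr.authenticate("car-A", key))      # True
    print(acr.authenticate("car-A", "guess"))  # False
```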

However, the IoT platform still needs to verify the reliability of data exchanged among the edge nodes and ensure that the data is not tampered with, compromised in terms of privacy, or published without proper authorisation. To this end, an IoT device that uses services from the edge nodes, or data on the IoT device or cloud interface, needs to carry out a synchronisation mechanism and secure the data using blockchain.

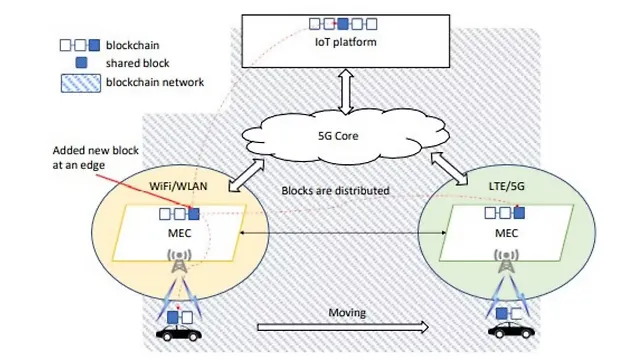

As shown in Figure 12, the edge computing architecture can offer more secure and trustworthy services by incorporating blockchain into the edge nodes, where data are processed locally. As the IoT devices, edge nodes, service instances, and the cloud are connected within the same blockchain network, trustworthy data transfer and information exchange are assured by a consensus mechanism used in blockchain technology.
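Real blockchains reach agreement with mechanisms such as Proof of Work, Proof of Stake, or Byzantine fault-tolerant protocols such as PBFT. As a much simpler illustration of the idea, the toy function below accepts the latest-block hash only if more than two-thirds of the connected entities (vehicles, edge nodes, cloud) report it; the function name, the threshold, and the reporting model are all assumptions.

```python
from collections import Counter
from typing import Optional


def agreed_head(reported_heads: dict[str, str], quorum: float = 2 / 3) -> Optional[str]:
    """Return the latest-block hash reported by more than `quorum` of entities, else None.

    reported_heads maps an entity name (vehicle, edge node, cloud) to the hash
    of the newest block in its local copy of the chain.
    """
    if not reported_heads:
        return None
    head, votes = Counter(reported_heads.values()).most_common(1)[0]
    return head if votes > quorum * len(reported_heads) else None


if __name__ == "__main__":
    reports = {"car-A": "9f2c", "rsu-1": "9f2c", "mec-1": "9f2c", "cloud": "41aa"}
    print(agreed_head(reports))  # 9f2c
```

An entity whose copy disagrees with the agreed hash, for example because its local data was altered, can then resynchronise from its peers.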

The MEC-enabled blockchain for IoT slicing and task offloading shown in Figure 12 supports information and data storage and distribution to the connected blockchain entities.

Conclusion

With a multifold increase in the number of IoT devices, supported by advances in IoT technology, numerous value-added services have become possible. New technologies such as MEC, together with NS and TO, further extend the ability of IoT to offer advanced services in real time.

Although the launch of 5G networks has opened new avenues for use cases and business opportunities by offering low latency and ultra-high reliability, among other advantages, conventional cloud-based IoT service platforms may still fall short of providing real-time services efficiently to billions of IoT devices.

Server-based and serverless cloud computing have been compared and their advantages discussed, followed by a detailed explanation of container technologies and of IoT service slicing based on micro-services. Recent studies have extended the MEC reference architecture to include containerisation toward serverless edge computing, successfully combining NS and TO to improve latency. This has been further enhanced by deploying blockchain at the edge nodes, IoT devices, and service instances, which ensures trustworthy data transfer and information exchange.

Future Work

One aspect of future work would be optimising Quality of Service (QoS) in terms of latency, security, and the range of services at the IoT edge, while taking into consideration network availability, network slicing, and task offloading. Future research should also evaluate the relative performance of serverless and server-based cloud architectures for connected-vehicle applications in terms of cost, reliability, round-trip time (RTT), and security in real-world scenarios in different parts of the world, following 3GPP and ETSI recommended use cases.

Another potential opportunity for future research is a comparison of commercial cloud service providers such as Microsoft Azure, Google Cloud Platform (GCP), and AWS, applying different use cases to connected-vehicle applications in real-world traffic and driving conditions. The use-case evaluation should also include dedicated cybersecurity tests of connected-vehicle applications with serverless computing at the edge nodes, covering identity protection, data privacy, authentication, data integrity, unauthorised data publishing, etc.

Future work may also study the variability of network connections provided through drones or unmanned aerial vehicles in remote areas and its impact on the QoS of connected vehicles, especially when augmenting Advanced Driver Assistance Systems (ADAS) features such as collision avoidance, forward collision warning, and lane departure warning.

The dynamic management of containers using AI technologies, and a comparison of approaches to virtualising micro-services at the edge nodes through effective containerisation, are further potential areas of future research.

References

[11] M. Malawski, A. Gajek, A. Zima, B. Balis, K. Figiela, Serverless execution of scientific workflows: Experiments with Hyperflow, AWS Lambda, and Google Cloud Functions, Future Generation Computer Systems, vol. 110, no. 8, pp. 502-514 (2020), https://doi.org/10.1016/j.future.2017.10.029

[12] L. F. Herrera-Quintero, J. C. Vega-Alfonso, K. B. A. Banse, E. Carrillo Zambrano, Smart ITS Sensor for the Transportation Planning Based on IoT Approaches Using Serverless and Microservices Architecture, IEEE Intelligent Transportation Systems Magazine, vol. 10, no. 2, pp. 17-27 (2018), https://doi.org/10.1109/MITS.2018.2806620

[13] S. V. Gogouvitis, H. Mueller, S. Premnadh, A. Seitz, B. Bruegge, Seamless computing in industrial systems using container orchestration, Future Generation Computer Systems (2018), https://doi.org/10.1016/j.future.2018.07.033

[14] J. Y. Hwang, L. Nkenyereye, N. M. Sung, et al., IoT service slicing and task offloading for edge computing, IEEE Journal, 44, 4 (2020)

About the Author: Dr Arunkumar M Sampath heads Electric Vehicle projects at Tata Consultancy Services (TCS) in Chennai. His interests include hybrid and electric vehicles, connected and autonomous vehicles, cybersecurity, extreme fast charging, functional safety, advanced air mobility (AAM), AI, ML, data analytics, and data monetisation strategies.